The visual effects (VFX) industry is undergoing a remarkable transformation as artificial intelligence (AI) advances at an incredible pace. With text-to-image and image-to-video tools now capable of generating high-quality visuals in seconds, artists and studios are increasingly exploring how AI can enhance workflows without sacrificing precision.

Companies such as Black Forest Labs and Stability AI, whose models play a role somewhat akin to that of render engines like Arnold and RenderMan in traditional pipelines, are at the forefront of developing powerful AI models. These tools are evolving rapidly, shifting away from text-only prompts toward deeper artistic control, modular AI systems, and bidirectional collaboration between artists and machines.

AI’s Strength: Speed – VFX’s Strength: Control

One of the biggest advantages AI brings is speed. AI image generators can produce high-quality visuals in seconds, accelerating ideation, iteration, and concept art workflows. However, VFX tools have always prioritised control and precision—frame-by-frame refinements, detailed PBR texturing, physics-accurate lighting, and fine adjustments to motion and composition. The future of VFX will see these two strengths converge, blending AI’s rapid iteration with the modular flexibility and precision of professional post-production tools.

AI Moving Beyond Text Prompts

AI models are evolving past simple text-based prompting, incorporating more artist-driven controls such as:

• ControlNet – Enables quick control over character pose and scene structure during generation and iteration, using guide images such as pose skeletons, depth maps, or edge maps.

• Reference Images & Style Conditioning – Improve consistency across multiple image generations.

• LoRAs (Low-Rank Adaptations) – Small add-on weight sets that tune a base model toward specific details (e.g., skin textures, character consistency).

While these techniques improve AI’s usability, they also increase model size and VRAM requirements, making real-time iteration challenging. This is driving the shift toward smaller, specialised AI models that function as plugins rather than monolithic generators.
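To make the LoRA idea concrete: a LoRA keeps a base weight matrix W frozen and learns a low-rank update, so the adapted weight is W + (alpha / r) · B·A, where B is d×r, A is r×k, and the rank r is small. The pure-Python sketch below is a minimal illustration under that assumption, with toy sizes and hypothetical helper names. It shows why a single LoRA is small compared with the layer it modifies, and how an adapter can be merged into the base weights. It is not the API of any particular library.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of a (n x m) and b (m x p)."""
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def lora_param_count(d: int, k: int, r: int) -> int:
    """Trainable parameters in a rank-r update for a d x k weight: B is d x r, A is r x k."""
    return d * r + r * k


def merge_lora(w: Matrix, b: Matrix, a: Matrix, alpha: float, r: int) -> Matrix:
    """Return W + (alpha / r) * B @ A, i.e. the adapter baked into the base weights."""
    delta = matmul(b, a)
    scale = alpha / r
    return [
        [w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
        for i in range(len(w))
    ]


if __name__ == "__main__":
    # Illustrative layer size (assumption): a 4096 x 4096 projection with a rank-16 adapter.
    d, k, r = 4096, 4096, 16
    full = d * k
    lora = lora_param_count(d, k, r)
    print(f"full layer: {full:,} params, LoRA: {lora:,} params ({lora / full:.2%})")

    # Tiny toy example: merge a rank-1 adapter into a 2 x 2 identity weight.
    w = [[1.0, 0.0], [0.0, 1.0]]
    b = [[1.0], [2.0]]  # d x r (2 x 1)
    a = [[0.5, 0.5]]    # r x k (1 x 2)
    print(merge_lora(w, b, a, alpha=1.0, r=1))  # [[1.5, 0.5], [1.0, 2.0]]
```

Keeping B and A separate lets artists swap adapters in and out like plugins. Merging them removes the extra computation at inference time but fixes that one adapter into the weights. Each adapter is small, yet stacking several of them alongside control models still adds to the memory footprint.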

The Shift Toward Modular AI Models

Stability AI appears to be steering toward a modular AI approach, breaking large models down into specialised stages. This kind of approach should allow different parts of an AI pipeline, such as text-to-image transformers, style adapters, or image refiners, to be trained and executed independently. This modular shift means:

• Smaller, specialised AI tools instead of a single massive model.

• Faster iteration and fine-tuning without loading enormous datasets.

• Artists using LoRAs like plugins, loading only what’s needed (e.g., specific details, lighting effects).

This mirrors how VFX artists layer shaders, composite effects, and render passes. AI is gradually integrating into post-production, assisting rather than replacing the existing creative pipeline.
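The plugin-style idea can be sketched as a pipeline of independent stages plus a registry that loads adapters only when a job asks for them. Everything below is hypothetical: `AdapterRegistry`, `Stage`, `run_pipeline`, and the adapter names are illustrative inventions, not Stability AI's or any library's API. The loaders return placeholder dictionaries rather than reading real LoRA weights.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

Job = Dict[str, Any]


@dataclass
class AdapterRegistry:
    """Hypothetical plugin registry: adapters are registered as loader functions
    and only loaded (then cached) the first time a job requests them."""
    loaders: Dict[str, Callable[[], Job]] = field(default_factory=dict)
    loaded: Dict[str, Job] = field(default_factory=dict)

    def register(self, name: str, loader: Callable[[], Job]) -> None:
        self.loaders[name] = loader

    def get(self, name: str) -> Job:
        # Lazy load: adapters that no job requests never occupy memory.
        if name not in self.loaded:
            self.loaded[name] = self.loaders[name]()
        return self.loaded[name]


@dataclass
class Stage:
    """One independently swappable step (e.g. base generator, style adapter, refiner)."""
    name: str
    run: Callable[[Job], Job]


def run_pipeline(stages: List[Stage], job: Job) -> Job:
    # Each stage takes the job produced by the previous one, like passes in a comp tree.
    for stage in stages:
        job = stage.run(job)
    return job


registry = AdapterRegistry()
# Placeholder loaders standing in for reading LoRA weights from disk.
registry.register("skin_detail", lambda: {"kind": "lora", "strength": 0.8})
registry.register("film_grain", lambda: {"kind": "lora", "strength": 0.3})


def base_generator(job: Job) -> Job:
    return {**job, "image": f"draft of {job['prompt']}"}


def apply_adapters(job: Job) -> Job:
    names = job.get("adapters", [])
    return {**job, "applied": {n: registry.get(n)["strength"] for n in names}}


if __name__ == "__main__":
    result = run_pipeline(
        [Stage("base", base_generator), Stage("adapters", apply_adapters)],
        {"prompt": "close-up portrait", "adapters": ["skin_detail"]},
    )
    print(result["applied"])        # {'skin_detail': 0.8}
    print(sorted(registry.loaded))  # ['skin_detail'] (film_grain was never loaded)
```

The design choice mirrors a compositing tree: each stage can be replaced or retrained without touching the others, and memory is spent only on the adapters a shot actually uses.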

Examples of Innovative AI Tools That Could Enhance VFX Workflows

New AI tools are reshaping creative workflows, offering greater flexibility and control:

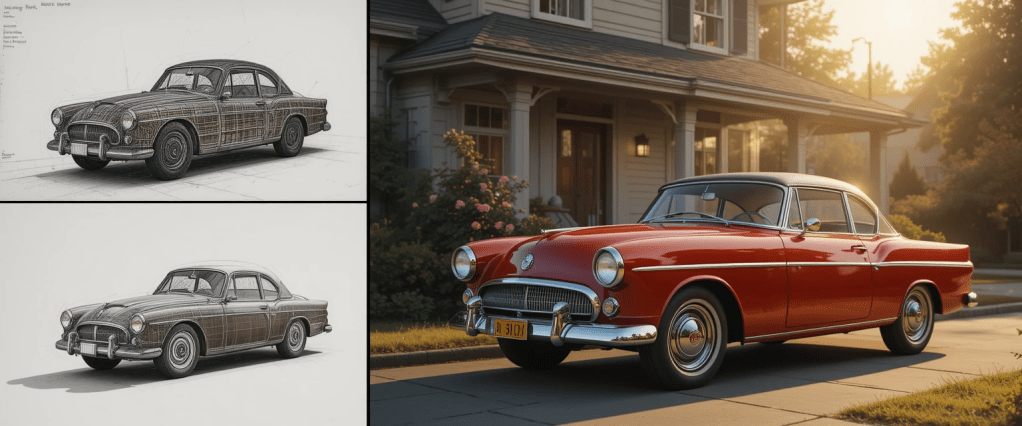

🎥 TrajectoryCrafter: Reinventing Camera Movements. TrajectoryCrafter allows artists to redirect camera trajectories in existing monocular videos, enabling camera adjustments in post-production.

• Instead of being locked into a single shot, artists can reposition the camera after rendering, refining angles dynamically. It uses a diffusion-based model to preserve 3D depth and motion, helping to maintain realism even when the perspective changes.

This is a time saver for VFX, animation, and virtual production, as it promises re-editable cinematography without costly re-renders. Of course, this is one of the first tools of its kind, and a pretty good first attempt; we will soon see how such tools integrate with production workflows.

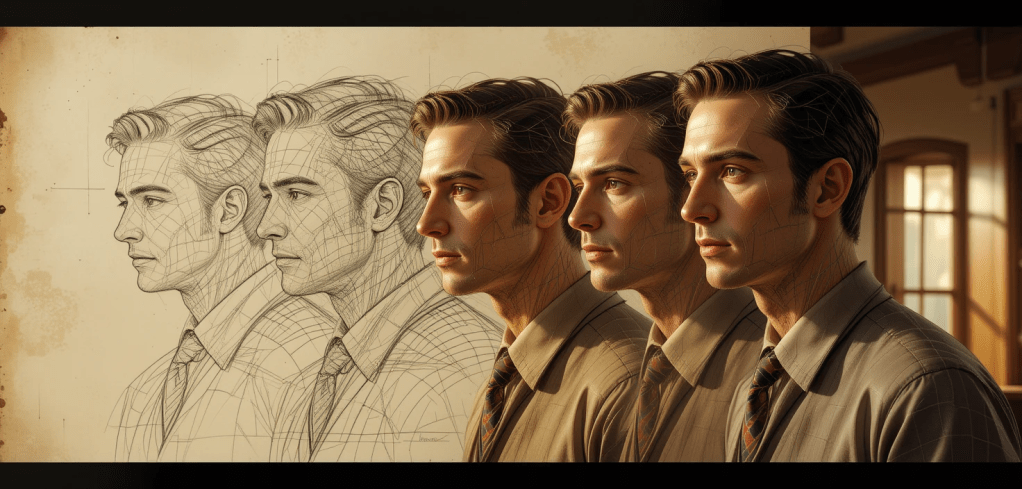

🎭 ACE++: Achieving Character Consistency: Maintaining consistent character details across images has always been a challenge. Traditionally, this required retraining AI models (e.g., LoRAs) for every character, adding complexity and increasing VRAM demands. ACE++ offers a new solution:

• The hope is that artists will be able to generate consistent characters from a single input image, with no additional training required. ACE++ currently uses context-aware content filling to help characters retain their identity across different prompts and scenes. Importantly, it also aims to let local edits be made to one portion of an image without changing the parts that already work during regeneration.

Can AI Understand What It Creates?

A major hurdle in AI development remains bidirectional comprehension: the ability both to generate images and to interpret them accurately. Text-to-image AI can create stunning visuals, but does it truly understand them? Image-to-text AI should describe images precisely, yet it still struggles with spatial awareness and object relationships.

The real test of AI’s evolution will be when text → image → text cycles produce near-identical results, which would indicate genuine semantic understanding. Until then, human artists remain the essential “quality control” layer, ensuring that AI-generated content aligns with artistic intent.
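A text → image → text round trip can be scored by comparing the original prompt with the caption recovered from the generated image. The sketch below assumes two hypothetical callables, `generate` (text to image) and `caption` (image to text), which stand in for real models. It uses word-level Jaccard overlap, a deliberately crude proxy. Practical evaluations more often compare learned embeddings, for example CLIP-style text and image encoders.

```python
import re
from typing import Callable, Set


def tokens(text: str) -> Set[str]:
    """Lower-cased word set; a crude stand-in for real semantic comparison."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: str, b: str) -> float:
    """Shared words divided by all distinct words (1.0 means identical word sets)."""
    ta, tb = tokens(a), tokens(b)
    if not ta and not tb:
        return 1.0
    return len(ta & tb) / len(ta | tb)


def round_trip_score(prompt: str,
                     generate: Callable[[str], object],
                     caption: Callable[[object], str]) -> float:
    """text -> image -> text: generate from the prompt, caption the result, compare."""
    image = generate(prompt)
    recovered = caption(image)
    return jaccard(prompt, recovered)


if __name__ == "__main__":
    prompt = "a red car parked left of a tree"
    # Hypothetical stand-ins for a text-to-image model and an image captioner.
    fake_generate = lambda p: {"objects": ["red car", "tree"]}
    fake_caption = lambda img: "a red car next to a tree"  # drops the spatial relation
    print(round(round_trip_score(prompt, fake_generate, fake_caption), 2))  # 0.44
```

In the toy run, the caption loses "left of," which is exactly the kind of spatial-relationship error described above. A score close to 1.0 across many prompts would be the "near-identical results" the round-trip test is looking for.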

AI and Artists: A Collaborative Future

Even if AI achieves perfect text-image-text translation, it will still need to adapt to how each artist thinks, speaks, and describes objects differently. This is why artists must actively engage with AI tools, training them to understand their unique creative style.

• AI should learn how each artist describes shapes, lighting, motion, and textures.

• The best results will come from collaborative learning, where AI adapts to the artist, rather than forcing the artist to adapt to AI.

Right now, data transformers are still relatively simple, making this the perfect time to get involved. We are already seeing multimodal AI solutions combining:

• Text, sketches, and image inputs

• Pose and depth data for true scene control

• Real-time artist feedback for iterative refinement

This moment in the current AI workflow, where AI listens, adapts, and collaborates, is the moment just before true bidirectional artist-AI interaction begins.

AI is a Creative Partner, Not a Replacement

AI is accelerating workflows, but it is not replacing human creativity. Instead, it is becoming an intelligent toolset that helps VFX artists:

• Iterate faster without compromising quality.

• Explore creative possibilities beyond manual workflows.

• Integrate AI seamlessly into existing VFX pipelines.

The future of VFX is not AI vs. artists—it’s AI + artists, working together to push creative boundaries faster, further, and with greater control than ever before.

What’s Next in the AI for VFX Series?

The next post in this series will focus on how artistic skills and AI tools intersect. While anyone can generate an image with AI, VFX professionals have a unique advantage:

• Drawing skills and character posing expertise help refine AI inputs directly, rather than blindly rewriting prompts.

• A refined sense of motion, scale, and composition allows better prompt engineering and direction.

• Understanding materials, reflections, and lighting behavior gives a significant edge in realistic AI-driven renders.

AI tools may generate fast results, but it’s an artist’s eye that determines what looks “right.” Just like VFX professionals develop their observational skills over time, mastering AI as a creative partner is another skill to refine. We will discuss:

• How AI tools complement traditional artistic skills.

• The importance of visual observation and refinement in AI-assisted workflows.

• Practical strategies for leveraging AI while maintaining creative control.

Stay tuned as we bridge the gap between traditional VFX skills and AI-powered workflows!